We provide support for UM faculties/research groups and support staff, as well as for UM individual researchers.

Responsible metrics

At Maastricht University Library, we are committed to the responsible use of metrics. We believe that research intelligence should support, not replace, qualitative evaluations. Analyses should be based on a careful selection of indicators and methods tailored to the particular goals of the evaluation.

Research intelligence should be question-driven: measure what you want to know, rather than what happens to be measurable. Excellence and performance are multidimensional concepts of which metrics can capture only some aspects.

Developments such as the signing of the San Francisco Declaration on Research Assessment (DORA), the Leiden Manifesto, the Strategy Evaluation Protocol 2021-2027 (SEP) and Recognition & Rewards all concern responsible metrics. What all these initiatives have in common is:

- Greater flexibility in the aspects on which both scientists and research units can be assessed;

- The use of a narrative supported by responsible metrics, instead of a focus on excellence (and thus competition) and on metrics like the JIF or the H-index.

DORA

On October 25, 2019, rector magnificus Rianne Letschert signed the DORA declaration on behalf of UM.

In line with DORA, the Research Intelligence team does not use the Journal Impact Factor (JIF) or other journal-based metrics to assess individuals or individual publications.

Furthermore, DORA states that consideration should be given to the value and impact of all research outputs (including datasets and software) in addition to research publications, and that a broad range of impact measures should be considered, including qualitative indicators of research impact, such as influence on policy and practice.

For organizations that supply metrics, such as the Research Intelligence team, DORA states that they should:

- Be open and transparent by providing data and methods used to calculate all metrics.

- Provide the data under a license that allows unrestricted reuse, and provide computational access to data, where possible.

- Be clear that inappropriate manipulation of metrics will not be tolerated; be explicit about what constitutes inappropriate manipulation and what measures will be taken to combat this.

- Account for the variation in article types (e.g., reviews versus research articles) and in different subject areas when metrics are used, aggregated or compared.

The Research Intelligence team guarantees these principles as follows:

- The data and methods used are described in the justification/methods section of our reports;

- Pure and InCites data are available, and use of the Dashboard guarantees reproducibility;

- We check how the analyses or figures we deliver are included in the final report and for what purpose journal-based metrics are requested. Journal-based metrics are not provided for the assessment of individual scientists or individual articles.

- Normalization takes into account the variation in article types. Furthermore, the Dashboard has the functionality to automatically include only reviews and articles for the InCites numbers to prevent strange outliers. There is also a filter for peer-reviewed publications on the Open Access tab in the Dashboard.

Leiden Manifesto

The Leiden Manifesto contains 10 principles that are intended as a guideline for research evaluation.

The following principles from this manifesto are relevant to our Research Intelligence services:

- Quantitative evaluation supports qualitative assessment by experts;

- Measure performance against the research missions of an institute, group or researcher;

- Give those who are being evaluated the opportunity to verify data and analyses;

- Take into account variations between disciplines in publication and citation practices;

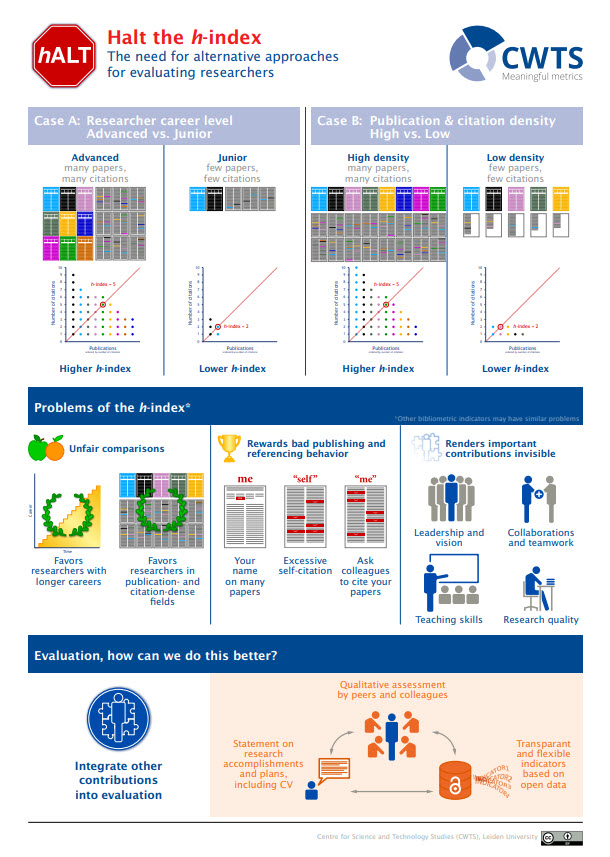

- Base the assessment of individual scientists on a qualitative assessment of their portfolio (and not on their H-index);

- Prevent misplaced concreteness and false precision;

- Recognise the effects assessments and indicators have on the system;

- Regularly review the indicators used and update them where necessary.

Why not the Journal Impact Factor (JIF)?

The Journal Impact Factor (JIF) is frequently used as the primary parameter to compare the scientific output of individuals and institutions.

The Journal Impact Factor was created as a tool to help librarians identify journals to purchase, not as a measure of the scientific quality of research in an article. With that in mind, it is critical to understand that the Journal Impact Factor has several well-documented deficiencies as a tool for research assessment.

These limitations include:

- Citation distributions within journals are highly skewed;

- The properties of the Journal Impact Factor are field-specific and therefore not comparable between fields: it is a composite of multiple, highly diverse article types, including primary research papers and reviews;

- Journal Impact Factors can be manipulated (or “gamed”) by the editorial policy;

- Data used to calculate the Journal Impact Factors are neither transparent nor openly available to the public.

Source: https://sfdora.org/read
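The first limitation can be illustrated with a minimal sketch. The citation counts below are invented for a hypothetical journal and are not real data. They show how a few highly cited papers can pull a JIF-style average well above what a typical article in the same journal receives.

```python
from statistics import mean, median

# Hypothetical citation counts for ten articles in one journal
# (invented for illustration only).
citations = [0, 0, 1, 1, 2, 2, 3, 4, 7, 80]

def summarize(counts):
    """Return the mean, the median, and the share of articles below the mean."""
    avg = mean(counts)
    below = sum(1 for c in counts if c < avg) / len(counts)
    return avg, median(counts), below

avg, med, share_below = summarize(citations)
print(f"mean (JIF-style average): {avg:.1f}")
print(f"median: {med}")
print(f"share of articles below the mean: {share_below:.0%}")
```

In this made-up example, one outlier accounts for most of the citations, so the average says little about any individual article.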

For more information on the limitations of the Journal Impact Factor as a tool for research assessment:

- Adler, R., Ewing, J., and Taylor, P. (2008) Citation statistics. A report from the International Mathematical Union.

- Seglen, P.O. (1997) Why the impact factor of journals should not be used for evaluating research. BMJ 314, 498–502.

- Editorial (2005). Not so deep impact. Nature 435, 1003–1004.

- Vanclay, J.K. (2012) Impact Factor: Outdated artefact or stepping-stone to journal certification. Scientometrics 92, 211–238.

- The PLoS Medicine Editors (2006). The impact factor game. PLoS Med 3(6): e291 doi:10.1371/journal.pmed.0030291.

- Rossner, M., Van Epps, H., Hill, E. (2007). Show me the data. J. Cell Biol. 179, 1091–1092.

- Rossner, M., Van Epps, H., and Hill, E. (2008). Irreproducible results: A response to Thomson Scientific. J. Cell Biol. 180, 254–255.

Why not the H-index?

Why not the Altmetric Attention Score or Research Interest score?

The use of composite indicators, even when they relate to individual publications, is also strongly discouraged. Composite indicators, such as the Altmetric Attention Score or the Research Interest Score of ResearchGate, cannot be interpreted meaningfully. For example, the Altmetric Attention Score is a weighted count of all the online attention found for an individual research output. It can include different forms of attention, each weighted differently. In other words, two outputs can have the same Altmetric Attention Score even though the forms of attention and the number of times each occurred are completely different. The same applies to the Research Interest Score of ResearchGate, which combines multiple publication metrics (reads, recommendations, and citations) into a weighted score.[1] Composite indicators are not measures of research impact of any kind.

The Altmetric Attention Score, shown in the so-called donuts, should never be used as an indicator in its own right, because it is a composite indicator. It can be used to navigate to outputs that have received a lot of attention and are worth investigating further, and is best used by individual researchers to understand the overall volume of attention their research has received online.
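The point about composite indicators can be made concrete with a minimal sketch. The weights and source names below are hypothetical and chosen only for illustration. They are not the weights Altmetric or ResearchGate actually use.

```python
# Hypothetical weights per attention source.
# These are NOT the actual weights of any real composite score.
WEIGHTS = {"news": 8, "blog": 5, "policy": 3, "social_post": 1}

def composite_score(mentions):
    """Weighted count: sum of (number of mentions x weight) over all sources."""
    return sum(WEIGHTS[source] * count for source, count in mentions.items())

# Two invented outputs with very different attention profiles.
output_a = {"news": 2, "social_post": 4}   # 2*8 + 4*1
output_b = {"blog": 1, "policy": 5}        # 1*5 + 5*3

print(composite_score(output_a), composite_score(output_b))
```

Both outputs end up with the same score, although one was picked up by news outlets and social media and the other by a blog and policy documents. The single number hides exactly the information needed to interpret it.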

[1] For the calculation see: https://explore.researchgate.net/display/support/Research+Interest+Score

Pitfalls when looking for indicators

Using raw counts (citations, mentions, views etc.)

Raw counts are difficult to interpret when a benchmark is missing to which the counts could be compared. Benchmarks necessary to interpret raw counts only exist for citations to articles, reviews, books, or proceedings papers indexed in the Web of Science or Scopus (See: normalized citation indicators subject guide).

Nevertheless, many scholars consider citations a measure of influence among scholars, especially in journal-oriented disciplines whose journals are well covered in the Web of Science or in Scopus.

Usage data (counts) are not direct measures of impact, nor even of usage itself where views or downloads are concerned. Research outputs that are viewed or downloaded are not necessarily read, used or cited, and the counts can be manipulated easily. (See also views and downloads)

Usage data do, however, point to outputs with citations, downloads, views, or online attention that you might not be aware of. These outputs could be worth investigating further to discover who is viewing, downloading, saving, reading, discussing, using, or citing your research output, for what reason and how often. The identity behind views, downloads or online mentions may not always be available, though, because some sources do not display it or because the ‘user’ did not create a personal profile for the source.

Finding benchmarks yourself

When using raw counts, mention only those that are exceptionally high and try to find benchmarks yourself. Compare like for like, explain why the chosen benchmark is feasible and fair to use, and report the platform the counts come from and the date they were retrieved. This is easier said than done, especially for datasets, software, or other outputs for which consensus on citation practices is lacking and only usage indicators such as views or downloads exist.
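As a minimal sketch of comparing like for like, the example below relates one raw count to the average count of a reference set of comparable outputs. The numbers and the function name are invented for illustration. This is a simplified ratio in the spirit of normalized indicators, not the exact calculation used by any particular database.

```python
from statistics import mean

# Hypothetical reference set: counts for outputs of the same type, field and
# publication year, retrieved from the same platform on the same date.
benchmark_counts = [2, 4, 4, 6, 9]

def normalized_ratio(own_count, reference_counts):
    """Ratio of an output's count to the mean count of comparable outputs."""
    expected = mean(reference_counts)
    if expected == 0:
        raise ValueError("benchmark mean is zero; the ratio is undefined")
    return own_count / expected

ratio = normalized_ratio(10, benchmark_counts)
print(f"{ratio:.2f}")
```

A ratio above 1 means the output received more than the average of the chosen reference set. Whether that is meaningful still depends on how well the reference set matches the output, which is why the benchmark, platform and retrieval date should be reported alongside it.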

Support for UM faculties/research groups and support staff

We provide our support in the context of the Strategy Evaluation Protocol (SEP) or on a question-driven basis.

While research evaluations for the SEP are part of the university library’s general service for all UM faculties, charges may apply for other analyses, depending on the scope of the question. To find out more, please get in touch with us via research-i@maastrichtuniversity.nl.

If you are affiliated with FHML, please contact Cecile Nijland of the FHML office first.

Research evaluations for the SEP

The Research Intelligence team supports UM faculties or research units in preparing periodic research evaluations based on the Strategy Evaluation Protocol 2021-2027.

Together with the unit under assessment, we decide on suitable indicators that fit the unit’s aims and strategy. These indicators can help account for past performance and inform strategic decision-making for the future. Please get in touch with us well in advance of your unit’s SEP (midterm) evaluation.

UM Research Intelligence Dashboard

The Dashboard provides insights by linking the research output registered in Pure to data from sources such as InCites, Web of Science, Unpaywall and Altmetric. It offers the possibility to apply various filters directly to the available data so that you can easily adapt the arrangement of the data and the level of detail to your needs.

Access to and use of the Dashboard is on request. A dataset is prepared, and access is provided to the particular dataset in the Dashboard via authentication.

Other analyses and/or visualisations

The data and plots in the Dashboard can be regarded as our standard service, especially aimed at research evaluation for the Strategy Evaluation Protocol (SEP). Your evaluation may require additional analyses and/or visualisations. Depending on the nature and scope of additional services, costs may be charged for this.

Alternative metrics

Altmetric is one of the platforms that gather alternative metrics, or for short ‘altmetrics’: a new type of metrics that aims to capture the societal impact of research output instead of traditional metrics such as citation counts.

As altmetrics are volatile and never fully captured by any database, we recommend not focusing on the numbers. Altmetrics can be a valuable source to identify who is building upon certain research work commercially and politically and to explore the general public’s sentiment.

Altmetric provides free data under certain restrictions. It also provides a paid platform called the Explorer for Institutions (EFI). This Altmetric Explorer allows the user to browse data by author, group, or department. As of July 2020, the UM has a license for the Altmetric Explorer, allowing us to make Altmetric data available on the ‘Social attention’ tab in the Dashboard. This Altmetric Explorer can be accessed by UM employees using their institutional e-mail address and password.

When using Altmetric data for evaluation purposes, be cautious: quantitative indicators should support qualitative, expert assessment and should not be used in isolation (see: Social media metrics for new research evaluation and The Leiden manifesto for research metrics).

For any guidance on using Altmetric responsibly, please get in touch with the Research Intelligence Team via research-i@maastrichtuniversity.nl.

Examples of faculty and research group questions

- How can non-bibliometric sources, such as news and policy documents, be studied?

- Which publications address topics relevant to one or more of the UN’s Sustainable Development Goals (SDGs)?

- What is the position of the research work relative to other work in the subject area?

- Which scholars are potential collaborators (inside/outside UM)?

- Which journals are suitable to publish certain research in?

- How strong/productive is the collaboration with other research units?

- What academic and/or societal impact did the research have?

- How can potential reviewers for a paper/proposal be recommended?

- What are the frequently investigated topics by a certain research unit?

- What were the main accomplishments in terms of research impact over a certain period of time?

- Which opportunities to increase impact are potentially neglected?

- Who are the co-authors of a certain researcher?

- What is the position of a certain research unit in relation to comparable research units?

- What is the position of our work in relation to our strategy?

- What could be the most strategic choice in terms of funding application, based on the previous impact of our research?

- Which journals do the researchers of a certain research unit usually publish in?

- What could be a suitable publication strategy based on the vision and mission of the research unit?

- Which research areas are potentially neglected?

- What are inspiring best practices that have gained frequent attention?

- Which researcher fits a certain profile based on their research outputs?

- How does a certain candidate compare to other candidates in terms of network and/or impact?

Support for individual UM researchers

There can be various reasons why, as a researcher, you want to gain insight into the (potential) impact of your work: for example, when applying for a grant, when preparing for an evaluation meeting with your supervisor/manager, or to support a narrative about the impact of your work on your profile page or personal website.

The services of the Research Intelligence team can help you to gain this insight. To find out more, have a look at the items on this webpage or get in touch with us via research-i@maastrichtuniversity.nl.

If you are affiliated with FHML, please contact Cecile Nijland of the FHML office first.

Subject guides & workshops

We provide more detailed information on impact-related issues through subject guides and workshops.

Have a look at these guides or workshops if you want to learn more about research impact and what you can do to improve the (potential) impact of your research work.

Subject guides

- Select the right journal for your paper

- Increase your research impact

- Citations & Citation databases

- Altmetrics (alternative metrics)

- Normalized Citation Indicators

Workshops

Toolkit Societal impact

As one of the results of a strategic partnership between Springer Nature and The Association of Universities in the Netherlands (VSNU), a toolkit has been created to help you understand how other researchers view societal impact and how they have been successful in creating it.

It is filled with plenty of advice and insights from researcher interviews, as well as further reading resources to help you find out more about societal impact and how to create it for your own research.

Guiding discussions on career goals, workload, leadership and team science

A tool that can help guide discussions around research career goals, workloads and leadership/collegiality is the Research Careers Tool, developed by the University of Edinburgh and designed to:

- map research-related activity and, across some dimensions, explore what next steps might involve,

- help researchers, in conversation with their peer groups, mentors and line managers, to be more strategic and selective in what they take on,

- capture the research-related component of academic staff workloads (not teaching, or administration, or more general leadership activities).[1]

Given the focus on selecting and prioritising activities, the Tool may be of more limited value for post-graduate researchers, and for research assistants or post-doctoral research fellows with a clear set of allocated tasks. In both cases, there will be less scope for selecting which research activities to focus on. However, for both of these groups, the Tool may be useful for reflecting on future career plans.

To uncover your strengths, points for development, and ambitions, you might use self-assessment tools such as:

- https://myidp.sciencecareers.org/Home/About

- https://www.euraxess.ie/ireland/researcher-career-development-tools-supports

[1] The focus on research is justified in the description of the Research Careers tool by pointing out that this is the area over which academics typically enjoy most autonomy but also where they typically feel the most pressure to perform.

Alternative metrics

Altmetric is one of the platforms that gather alternative metrics, or for short ‘altmetrics’: a new type of metrics that aims to capture the societal impact of research output instead of traditional metrics such as citation counts.

As altmetrics are volatile and never fully captured by any database, we recommend not focusing on the numbers. Altmetrics can be a valuable source to identify who is building upon certain research work commercially and politically and to explore the general public’s sentiment.

Altmetric provides free data under certain restrictions. It also provides a paid platform called the Explorer for Institutions (EFI). This Altmetric Explorer allows the user to browse data by author, group, or department. As of July 2020, the UM has a license for the Altmetric Explorer. This Altmetric Explorer can be accessed by UM employees using their institutional e-mail address and password.

When using Altmetric data for evaluation purposes, be cautious: quantitative indicators should support qualitative, expert assessment and should not be used in isolation (see: Social media metrics for new research evaluation and The Leiden manifesto for research metrics).

For any guidance on using Altmetric responsibly, please get in touch with the Research Intelligence Team via research-i@maastrichtuniversity.nl.

Examples of researcher questions

- How can non-bibliometric sources, such as news and policy documents, be studied?

- Which publications address topics relevant to one or more of the UN’s Sustainable Development Goals (SDGs)?

- What is the position of the research work relative to other work in the subject area?

- Which scholars are potential collaborators (inside/outside UM)?

- Which journals are suitable to publish certain research in?

- How strong/productive is the collaboration with other research units?

- What academic and/or societal impact did the research have?

- How can potential reviewers for a paper/proposal be recommended?

Evidence-Based Narrative CV

A support page has been set up to guide academics in writing an evidence-based narrative CV for grant proposals or the UM Career Compass. A narrative CV is a description of the candidate’s academic profile that leaves room for the individual academic’s special qualities and captures a much wider range of contributions, skills, and experiences than a traditional publication- or output-focused CV. Within a narrative CV, quantitative and qualitative indicators or other special or valuable characteristics of academic output can be used as evidence of academic or societal impact. Which indicators to use and which aspects of your academic profile to highlight depends on the assessment context, your discipline (typical outputs and activities), your personal situation, or the specifics of the research project for which a grant is sought.

Go to the support page Evidence-Based Narrative CV

My Research Intelligence Profile

This application provides UM researchers with indicators of the academic and societal impact & open access status of their research outputs registered in UM’s research information system Pure. The indicators can be used as support for a qualitative evaluation or narrative for grant applications, the UM Career Compass, promotions and more. In accordance with the requirements of Dutch funders and UM policy, the application shows article-level indicators (e.g. CNCI) as opposed to journal-based indicators (e.g. JIF).

Please note that this information is visible only to you.

If you need help to get started with your grant proposal, contact your UM funding advisor.